Reconsidering Writing Pedagogy in the Era of ChatGPT

Lee-Ann Kastman Breuch, Kathleen Bolander, Alison Obright, Asmita Ghimire, Stuart Deets, and Jessica Remcheck

Results

Our study collected a variety of data types; combined, they paint a complex picture of how students understood ChatGPT. Students were impressed with ChatGPT but also had several questions and critiques about it. This section shares summaries of quantitative ratings and qualitative student comments about ChatGPT output. In addition, we report “filters” that emerged from our qualitative coding process.

Student Ratings of ChatGPT Texts

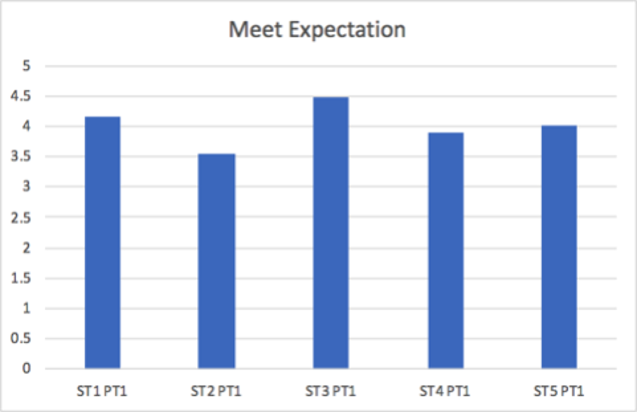

Quantitative data in our usability test took the form of student ratings of ChatGPT texts, which we gathered through multiple questions. For each of five ChatGPT texts, we asked students to rate on a scale of 1-5 (5 being positive and 1 being negative) whether the texts met their expectations, their level of satisfaction, whether they would use the text unaltered, and whether they would find ChatGPT texts useful in the process of writing. One question asked students to rate how well ChatGPT texts met their expectations, with 1 being not very well and 5 being extremely well. As Figure 2 shows, student participants overall noted that ChatGPT texts met their expectations, with average ratings ranging from 3.5 to 4.5. In their comments, students said they were impressed by the speed with which ChatGPT produced texts, by the content and ideas, and, frequently, by the clear organization of ideas. Responses to this question often reflected students’ first impression of ChatGPT. Note that Figure 2 shows average ratings across 32 participants for each of the five tasks in the usability test. Expectations did vary somewhat among the five tasks, but students consistently reported that ChatGPT generally met (and often exceeded) their expectations.

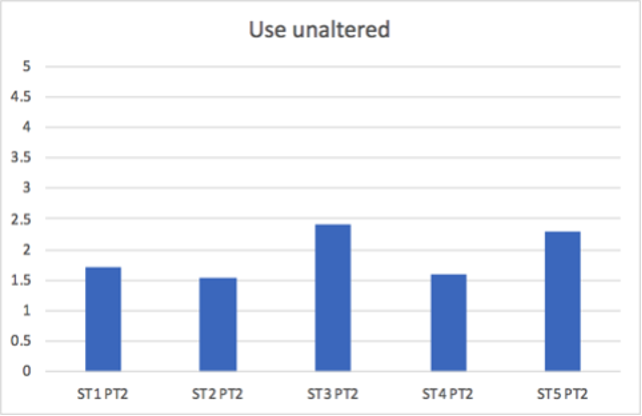

Results on the question of satisfaction were similar. Students were asked to rate their satisfaction with each ChatGPT text on a scale of 1 to 5, with 1 being not very satisfied and 5 being very satisfied. As Figure 3 shows, all students rated their satisfaction above a 3 on a 5-point scale. The ratings again varied by ChatGPT text, but the overall range of satisfaction was similar to that for the question about expectations; students were generally satisfied with the texts produced by ChatGPT.

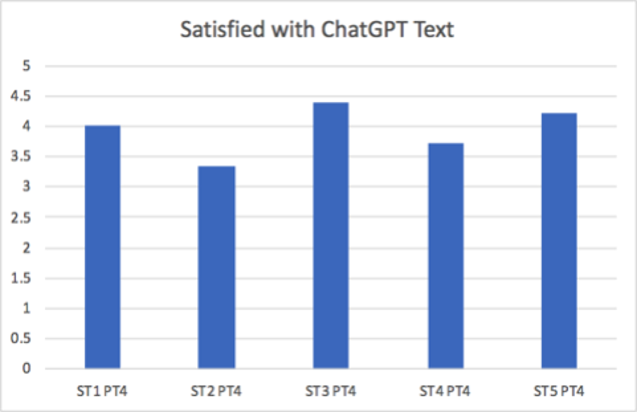

An important aim of this study was to learn whether students might use ChatGPT text unaltered as their academic homework. We asked this question because of the ethical concerns that had been circulating around ChatGPT use. We wanted to learn how students thought about this question, specifically in response to texts they observed during the usability test. As Figure 4 shows, students responded that they were not likely to use ChatGPT texts unaltered as their academic homework. In their comments, students said they might want to change the text, add their own voice or information, further develop the text, or verify the accuracy of ChatGPT.

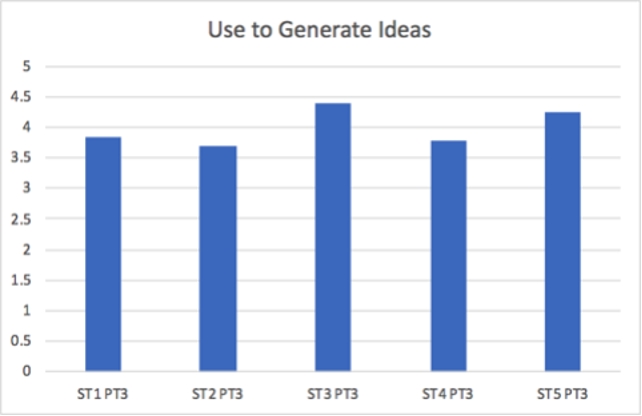

However, when asked about the likelihood of using ChatGPT texts to generate ideas, content, or other parts of the writing process, students were much more enthusiastic. Figure 5 shows a more positive response to ChatGPT for each of the five texts in the usability test. Average ratings varied between 3.5 and 4.5.

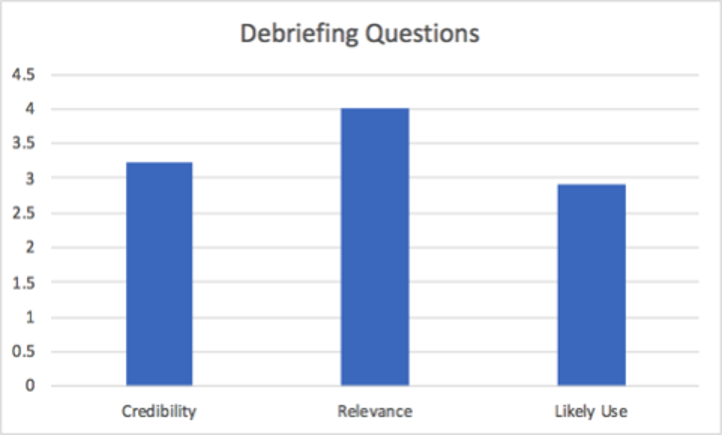

During a debriefing session, we asked students to share their overall impressions of ChatGPT texts in terms of credibility, relevance, and likelihood of use. As Figure 6 shows, students rated the relevance of ChatGPT texts higher than their credibility. Student comments supported this perception: students found that the content of ChatGPT texts seemed well aligned with the prompts, but they had doubts about the credibility of information from ChatGPT. As one student said, “I don’t know where ChatGPT got this information.” Other student participants shared similar comments about their uncertainty regarding the information sources behind the ChatGPT texts.

Results from Product Reaction Cards

As part of debriefing interviews, we asked participants to describe their experience with ChatGPT in the usability session using five different adjectives. Participants were each given the same list of adjectives, known as “Product Reaction Cards,” a technique created at Microsoft consisting of 118 words that comprise a “desirability matrix.” In our ChatGPT usability evaluations, students reviewed this list and each chose five words that they felt best described their experience. Out of this list, 64 words appeared among student choices, and 34 words were chosen by more than one student.

Students more frequently chose positive words to describe their experience, such as “time-saving” (12), “fast” (11), “easy-to-use” (10), “efficient” (8), “convenient” (8), and “innovative” (6). Some words carried negative sentiment, such as “intimidating” (1), “incomprehensible” (1), “frustrating” (1), “unusable” (1), “sterile” (1), “poor quality” (1), and “inaccurate” (1), but they were selected less frequently. The word cloud in Figure 7 visually represents the student reactions; larger words indicate a higher frequency of use. Combined, these words provide a glimpse into student understanding. Students were impressed by ChatGPT functionality and selected words that reflected the mechanistic capabilities of ChatGPT.
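
To make the balance between positive and negative selections concrete, the counts quoted above can be totaled in a few lines of Python. This is only an illustrative sketch: it uses just the thirteen words named in this section (not all 64 words that students chose), the positive/negative grouping follows the description above, and the function name `summarize` is a hypothetical helper, not part of the study's analysis.

```python
# Selection counts for the words named in the text above.
# This is a subset of the 64 words students chose, not the full data set.
positive_counts = {
    "time-saving": 12,
    "fast": 11,
    "easy-to-use": 10,
    "efficient": 8,
    "convenient": 8,
    "innovative": 6,
}
negative_counts = {
    "intimidating": 1,
    "incomprehensible": 1,
    "frustrating": 1,
    "unusable": 1,
    "sterile": 1,
    "poor quality": 1,
    "inaccurate": 1,
}


def summarize(positive, negative):
    """Total the selections in each group and compute the positive share."""
    positive_total = sum(positive.values())
    negative_total = sum(negative.values())
    listed_total = positive_total + negative_total
    return {
        "positive_total": positive_total,
        "negative_total": negative_total,
        "positive_share": positive_total / listed_total,
    }


summary = summarize(positive_counts, negative_counts)
print(summary)
```

Among the words listed here, positive selections outnumber negative ones 55 to 7. Because the remaining words are not reported in this section, these totals describe only the named examples, not the full distribution of student choices.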