Reconsidering Writing Pedagogy in the Era of ChatGPT

Lee-Ann Kastman Breuch, Kathleen Bolander, Alison Obright, Asmita Ghimire, Stuart Deets, and Jessica Remcheck

Study Methods

Given this backdrop, we were curious about what undergraduate students thought about ChatGPT. Our study combined usability testing with contextual interviews. We asked all participants to complete a set of five ChatGPT tasks, from which we gathered quantitative and qualitative data. Following these tasks, we asked a number of contextual interview questions to learn more about student reactions to ChatGPT as a tool for academic writing. In sum, students were invited to complete writing tasks, read ChatGPT responses, and provide quantitative ratings and qualitative comments about what they read. Our study was guided by the following research questions:

- How are undergraduate students understanding ChatGPT as an academic writing tool?

- To what extent are students incorporating ChatGPT into their writing product(s)?

- How are students thinking about ChatGPT in their writing process?

Because we designated an academic writing focus, we limited our prompts to verbal writing tasks; prompts did not include content related to coding assistance, problem sets, or other types of multimodal writing activities. We recruited students through email invitations sent through the Undergraduate Office of the College of Liberal Arts and the Department of Writing Studies, and through messages to students from instructors in writing classes. The study was approved by the Institutional Review Board and was granted exemption (STUDY00018525).

Our research team conducted usability sessions with individual student participants virtually, using Zoom video conferencing software. All student participants were given a consent form, which included permission to record the usability session. During each session, one moderator led the session and gave the participant remote control access to a ChatGPT session accessed through an account managed by the research team. We controlled access in this way to minimize the risk that participants would have to share personal information with an outside organization. Other team members were also present to observe and take notes during the session. Each session included an introduction and overview of the study, an explanation of consent and confidentiality, completion of a background questionnaire, completion of five writing tasks using ChatGPT with rating and open comment questions, a product reaction card exercise in which students selected five words to describe their experience, and a debriefing interview. Each session lasted about sixty minutes. Full descriptions of the test materials are provided in the downloadable appendix. Participants were offered a monetary incentive, a $15 gift card, for participating in the study. Initially, 49 students responded to the invitation; however, several were not able to complete the session due to time conflicts, and others completed only parts of the session. A total of 32 students completed the usability session, and participants represented 17 academic majors within the College of Liberal Arts and 5 academic majors in other programs at the university.

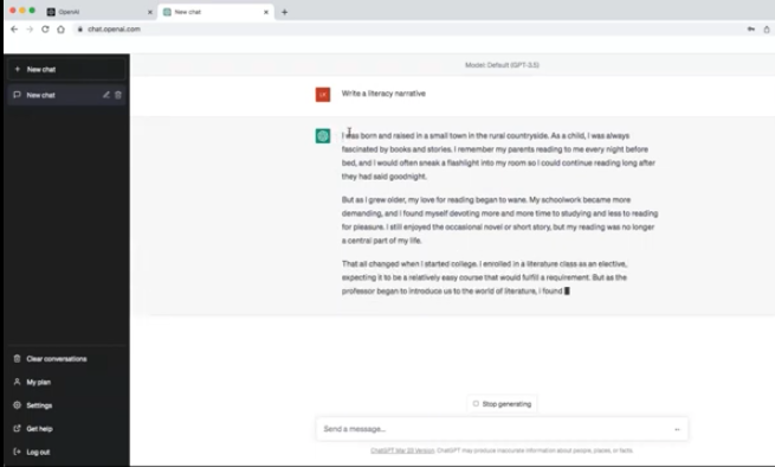

The usability sessions included five scenario tasks that were meant to simulate academic writing assignments that students may encounter in their classes, whether in a first-year writing course, a discipline-specific course, or a designated “writing-intensive” course. We recognize an inherent limitation of using a usability test for this research: students may be more or less likely to reveal their true thoughts about ChatGPT texts while in an academic setting due to their fears of academic misconduct. Participants received a scenario and a relevant prompt and were asked to type the prompt verbatim into ChatGPT. As shown in Figure 1, once entered, the prompt is placed next to a red box on the screen, followed by a green box in which ChatGPT generates a response.

The scenarios and prompts are described below, and a video transcript is provided for each scenario and task:

- You have heard that ChatGPT can write essays. You decide to ask ChatGPT to “write a literacy narrative” which is a prompt you received in an undergraduate writing class for an upcoming assignment.

Video Transcript

The ChatGPT interface appears as a blank page with a user input box at the bottom. At the top of the page a dropdown indicates that it is using Default (GPT-3.5) as the model. There is no sound in this video. LK: Write a literacy narrative ChatGPT: I was born and raised in a small town in the rural countryside. As a child, I was always fascinated by books and stories. I remember my parents reading to me every night before bed, and I would often sneak a flashlight into my room so I could continue reading long after they had said goodnight.

But as I grew older, my love for reading began to wane. My schoolwork became more demanding, and I found myself devoting more and more time to studying and less to reading for pleasure. I still enjoyed the occasional novel or short story, but my reading was no longer a central part of my life.

That all changed when I started college. I enrolled in a literature class as an elective, expecting it to be a relatively easy course that would fulfill a requirement. But as the professor began to introduce us to the world of literature, I found myself drawn in. I read novels and poems that I never would have picked up on my own, and I discovered a whole new world of ideas and perspectives.

As the semester progressed, I found myself reading more and more outside of class. I devoured novels by Jane Austen, Virginia Woolf, and Toni Morrison, and I spent countless hours in the campus library, poring over literary journals and anthologies.

But it wasn't just the books that I fell in love with - it was the act of reading itself. I began to appreciate the quiet moments that reading provided, the chance to escape from the world for a little while and lose myself in someone else's story. I found that reading made me a better writer, too, as I learned to appreciate the nuances of language and style that distinguished great literature from the mediocre.

Now, years later, I still read voraciously. I carry a book with me wherever I go, and I always have a stack of novels waiting on my bedside table. Reading has become a central part of who I am, and I'm grateful for the way it has enriched my life. I may have lost my love for reading for a time, but I'm glad I found it again.

- You are writing a research paper for an undergraduate class and decide to ask ChatGPT to “summarize research about the use of computers in higher education between 1970 and 2020.”

Video Transcript

The ChatGPT interface appears as a blank page with a user input box at the bottom. At the top of the page a dropdown indicates that it is using Default (GPT-3.5) as the model. There is no sound in this video. LK: Summarize research about the use of computers in higher education between 1970 and 2020. ChatGPT: Between 1970 and 2020, there has been a significant increase in the use of computers in higher education. In the 1970s, computers were mainly used for administrative purposes, such as record-keeping and scheduling. In the 1980s and 1990s, the use of computers expanded to include instructional applications such as computer-assisted instruction and simulations.

During the 2000s, the use of computers in higher education continued to grow, with the development of online learning and the widespread use of learning management systems (LMS) such as Blackboard and Moodle. This allowed for the delivery of courses entirely online or as a hybrid of online and traditional classroom instruction.

More recently, in the 2010s, the use of computers in higher education has shifted towards the incorporation of emerging technologies, such as virtual and augmented reality, mobile learning, and artificial intelligence. These technologies have the potential to enhance the learning experience and provide more personalized instruction.

Overall, the use of computers in higher education has had a significant impact on the way courses are taught and delivered, with the potential to improve student outcomes and increase access to education.

- Provide a list of best practices in health writing.

Video Transcript

The ChatGPT interface appears as a blank page with a user input box at the bottom. At the top of the page a dropdown indicates that it is using Default (GPT-3.5) as the model. There is no sound in this video. LK: Provide a list of best practices in health writing ChatGPT:

- Understand your audience: It is important to know who your target audience is before you start writing. Whether it's healthcare professionals or patients, understanding their needs and level of knowledge will help you tailor your message to them.

- Use clear and concise language: Use simple language and avoid technical jargon or complex medical terms. Your writing should be easy to read and understand, regardless of your audience.

- Be accurate and credible: Health information is serious business, so it's important to ensure that your information is accurate and up-to-date. Use reliable sources and avoid making false claims or providing misleading information.

- Use visuals: Visual aids such as infographics, images, and videos can help to make complex health information more accessible and understandable.

- Avoid scare tactics: While it's important to emphasize the importance of health, avoid using scare tactics to get your message across. This can cause unnecessary anxiety and stress in your readers.

- Provide actionable advice: Give your readers practical advice that they can use to improve their health. Whether it's tips for healthy eating or advice on how to quit smoking, actionable advice can help your readers take control of their health.

- Use stories and anecdotes: Stories and anecdotes can help to humanize your writing and make it more relatable to your readers. Personal stories can also help to inspire and motivate your readers.

- Provide references and sources: Cite your sources and provide references where necessary. This will help to establish your credibility and allow readers to follow up on the information you've provided.

- Avoid medical advice: Unless you're a qualified healthcare professional, avoid giving medical advice. Stick to providing information and advice on lifestyle changes that can help to improve health.

- Respect privacy: Always respect the privacy of individuals when discussing health issues. Avoid sharing personal information or using real names without permission.

- Enter a prompt in ChatGPT that is similar to a paper topic that would be common and/or expected in your major.

Video Transcript

The ChatGPT interface appears as a blank page with a user input box at the bottom. At the top of the page a dropdown indicates that it is using Default (GPT-3.5) as the model. There is no sound in this video. LK: Write an absract (150-250) words describing an experiment on levels of reflection impacting generosity. Suppose you found that a high level of reflection causes participants to be significantly more generous than those who do not participate in a high level of reflection. ChatGPT: The present experiment aimed to investigate the impact of levels of reflection on generosity. The study recruited 100 participants from a university community and randomly assigned them to either a high or low reflection condition. Participants in the high reflection group were asked to spend 10 minutes reflecting on their personal values and how these values could guide their actions towards others. In contrast, participants in the low reflection group were simply asked to complete a control task that did not involve reflection.

Following the reflection task, participants were asked to play a one-shot dictator game in which they were given $10 to split between themselves and an anonymous recipient. Results revealed that participants in the high reflection group were significantly more generous than those in the low reflection group, as they allocated a larger proportion of the money to the recipient.

These findings suggest that engaging in a high level of reflection on one's values and their implications for behavior can increase generosity towards others. Implications of these findings may have practical implications for interventions aimed at promoting prosocial behavior, such as encouraging individuals to engage in reflective exercises that highlight the importance of helping others and acting in line with one's values.

- Analyze the role of the Jesuits in China in the 16th-18th centuries, particularly in regard to the technology of the telescope. Include as much detail as possible and use citations in Chicago Style.

Video Transcript

The ChatGPT interface appears as a blank page with a user input box at the bottom. At the top of the page a dropdown indicates that it is using Default (GPT-3.5) as the model. There is no sound in this video. LK: Analyze the role of the Jesuits in China in the 16th through 18th centuries, particularly in regard to the technology of the telescope. Include as much detail as possible and use citations in Chicago style. ChatGPT: The Jesuits played a significant role in introducing Western science and technology to China during the 16th through 18th centuries. They were a Catholic religious order founded by Ignatius of Loyola in 1534, known for their intellectual and academic pursuits. In China, the Jesuits were particularly interested in introducing European astronomy and mathematics to the Chinese, and they made significant contributions to the development of the telescope in China.

One of the most notable Jesuits who worked in China during this period was Matteo Ricci. He arrived in China in 1583 and spent the rest of his life there, learning the language and immersing himself in Chinese culture. Ricci was interested in introducing Western science to China, and he believed that this could be achieved by winning the trust of the Chinese emperor and the scholars at the Imperial Court.

Ricci's efforts were successful, and he was able to gain access to the Imperial Court. He used this opportunity to introduce European astronomy to the Chinese, and he taught the Chinese how to use the telescope. The Chinese were fascinated by the telescope, and they quickly realized its potential for observing the stars and planets. Ricci's work on the telescope was so significant that he was awarded the title "Master of the Mathematical Sciences" by the Chinese emperor in 1601.

Other Jesuits who worked in China during this period also contributed to the development of the telescope. Johann Schreck, a G $ Regenerate response a new design for the telescope... (the video cuts before the full ChatGPT response appears on screen; the '$ Regenerate' is an interface element obscuring the text below).

For each scenario and prompt, participants were asked to “think out loud” and share any thoughts about the ChatGPT texts they were viewing. We then asked students to rate each ChatGPT text on a scale of 1-5 (1=low; 5=high) regarding the following questions:

- How well does the ChatGPT text meet your expectations?

- How likely would you be to use this ChatGPT text unaltered as your academic homework?

- How likely would you be to use this ChatGPT text not as unaltered but rather to generate ideas about organization, content or expression for a future draft you might write?

- How satisfied are you with this ChatGPT text?

In addition, in a debriefing interview, we asked participants further questions such as:

- What was your first impression of ChatGPT?

- What do you like about the ChatGPT texts produced?

- What do you not like about the ChatGPT texts produced?

- How do you rate the credibility of ChatGPT texts on a scale of 1 to 5 with 1 being low credibility and 5 being high credibility?

- How do you rate the relevance of ChatGPT texts on a scale of 1 to 5 with 1 being low relevance and 5 being high relevance?

- How likely are you to use ChatGPT in an academic class? Why or why not?

- What questions, if any, does ChatGPT raise for you?