Reconsidering Writing Pedagogy in the Era of ChatGPT

Lee-Ann Kastman Breuch, Kathleen Bolander, Alison Obright, Asmita Ghimire, Stuart Deets, and Jessica Remcheck

Qualitative Student Comments about ChatGPT

Our usability test included qualitative student comments in multiple forms. For example, we gathered “think aloud” comments from students as they read each ChatGPT text in production. In addition, we asked students to explain each of the four ratings they gave for each of the five ChatGPT texts included in the usability test. Each usability test also included eight debriefing interview questions which provided opportunities for student reflection. This collection of questions yielded 37 qualitative comments per student, and each response in the usability test was regarded as one unit of analysis. Given our complete participant pool of 32 students, our study yielded 1,184 qualitative comments.

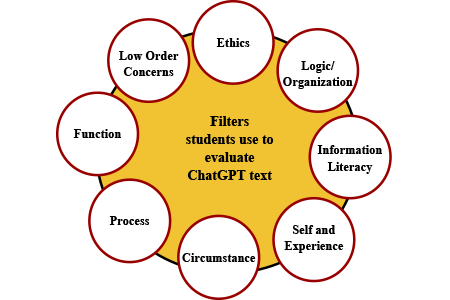

To directly address our research questions, we reduced our data set to focus specifically on qualitative comments that addressed student impressions about using ChatGPT texts, how they would use ChatGPT texts, and how ChatGPT might impact their writing process. We selected qualitative comments from four of the five usability tasks and four debriefing questions. This yielded a data set of 17 qualitative responses per student, roughly half of the total qualitative comments provided by each student, for a new total of 544 qualitative units of analysis. Given this data set, through team discussions, we started our coding by identifying 45 individual codes. Through continued discussion of the data, we sorted these codes into eight categories, which we described as “filters.” As our team member Alison Obright described, filters became a productive way to think about the lenses students used to think about ChatGPT texts. As we described these filters, we developed a codebook to guide our coding. The “filters” in our codebook ranged from “low order concerns” which included gut-level responses to texts and surface concerns, to “logic/organization” comments, in which students offered critiques of the ways ChatGPT had organized texts and arguments. Using these filters, we coded all 544 units of analysis, and each unit was coded by at least two team members to strengthen inter-rater reliability. Initial coding using the eight filters yielded strong agreement among team member pairs, and we continued to work to code the entire data set in this manner. Any coding disagreements were resolved through discussion by the team members. Data reported here reflects the final coding agreements.
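The counts reported above can be checked with simple arithmetic, and the double-coding step can be illustrated with a basic agreement measure. The sketch below is a minimal illustration only: the source does not say which agreement statistic, if any, the team calculated, so `percent_agreement` and the four example codes are hypothetical and are not data from the study.

```python
# Counts reported in the text.
PARTICIPANTS = 32
COMMENTS_PER_STUDENT = 37  # all qualitative comments per usability test
CODED_PER_STUDENT = 17     # subset selected for coding

total_comments = PARTICIPANTS * COMMENTS_PER_STUDENT  # 1,184 comments
coded_units = PARTICIPANTS * CODED_PER_STUDENT        # 544 units of analysis


def percent_agreement(coder_a, coder_b):
    """Share of units to which two coders assigned the same filter.

    Hypothetical helper: simple percent agreement is an assumption here,
    not a measure the study reports using.
    """
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same units")
    if not coder_a:
        raise ValueError("no units to compare")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


# Hypothetical codes for four units; illustrative only, not study data.
example_a = ["process", "ethics", "function", "process"]
example_b = ["process", "ethics", "circumstance", "process"]
example_agreement = percent_agreement(example_a, example_b)
```

In this illustration the two coders disagree on one of four units; in the study, disagreements of this kind were resolved through team discussion.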

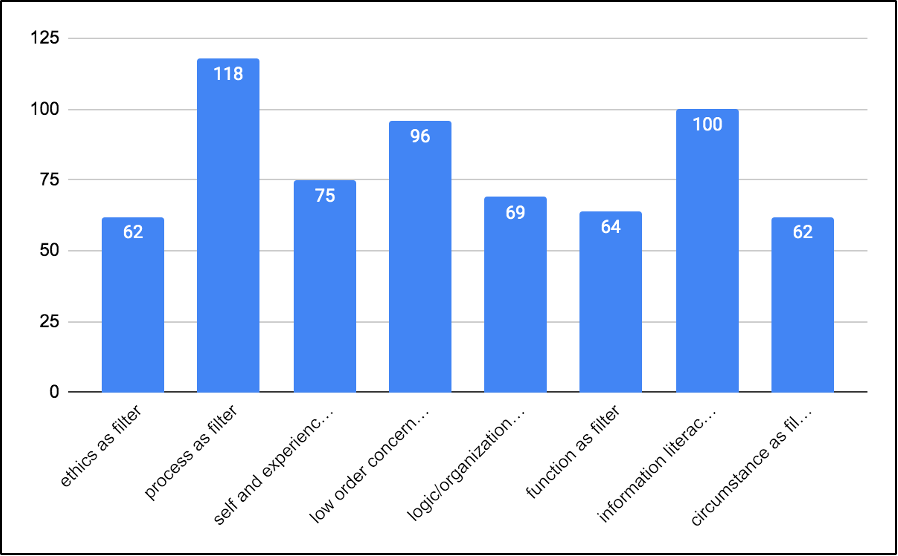

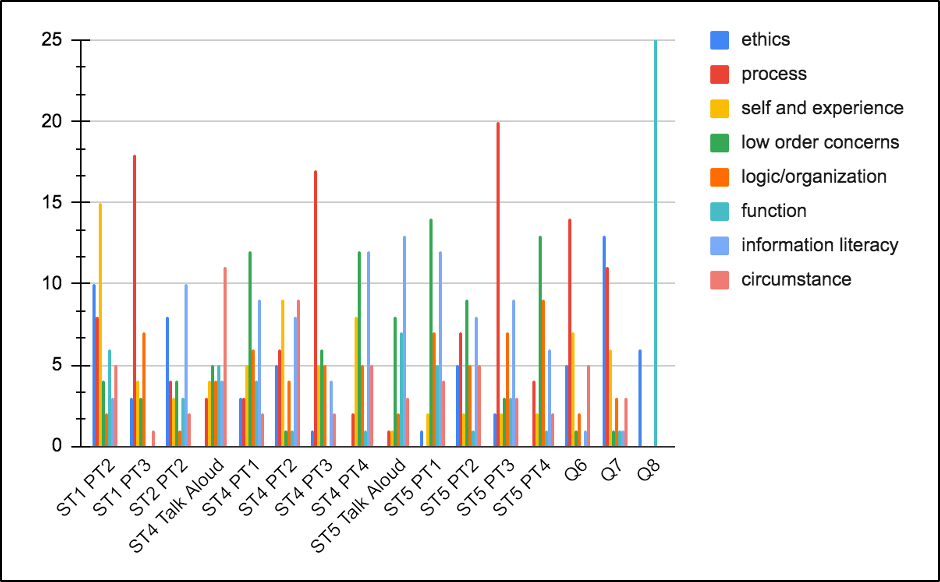

These filters reflected a range of student comments about ChatGPT as it intersected with verbal academic writing. As Figure 9 shows, in the data set we coded, the filters of “process,” “information literacy,” and “low order concerns” occurred most often. These filters were distributed differently for each of the 16 response items in the usability evaluation, as Figure 10 shows; yet the same three filters remained among the most frequently coded. The “function” filter also stood out as prominent to us. In the sections below we describe each filter and how it appeared in our data set.

"Process" as Filter

The most frequently coded filter in our study was “process,” a reference to stages and activities involved in academic writing tasks. "Process" also alludes to the idea that writing does not happen all at once, but rather occurs across time and often with multiple iterations (Adler-Kassner & Wardle, 2016). "Process" can be described in simplistic terms such as “prewrite, write, and rewrite” or in more rhetorical terms such as “invention, arrangement, and style,” or in other popular terms such as “prewriting, drafting, and editing.” In our study, the filter language we coded as “process” reflects ways that students described how ChatGPT can impact their writing process as they are working on a paper (see Appendix A). Students used this filter when thinking about how ChatGPT texts could “jumpstart” their writing, providing ideas or even a template. Some students described ChatGPT texts as outlines for a larger paper. Examples of student comments in this filter include:

- “I like that it kind of gave me ideas so I know how to move forward with the literacy narrative.” (Participant UU)

- “It seems like it could be really useful and like helping me structure my own, like research paper or something like that.” (Participant NN)

- “I could see myself using this for inspiration for actually designing an experiment.” (Participant L)

This filter was important because it illustrated the many ways students noted that ChatGPT might be a helpful tool in various parts of their writing processes, whether that involved getting started, organizing content, or editing a version of student writing.

"Low Order Concerns" as Filter

The second most frequently coded filter in our review of student comments was “low order concerns.” This filter language comes from writing center scholarship where low order concerns refer to “matters related to surface appearance, correctness, and standard rules of written English” (McAndrew and Reigstad, 2001). Comments that were assigned this code demonstrate how students thought about writing mechanics such as sentence length and structure, the “correctness” of writing in terms of grammar, and the voice and style of ChatGPT, including transitions between ideas and topics. This code was also used to describe uncritical or surface level comments like “it's good” or “I think the text is fine.” Examples of this filter include:

- “I don't think it's a very good essay, and I think it would be clear that you use some sort of AI near the bottom when it starts to repeat itself, it doesn't really sound natural” (Participant)

- “Instantly, I'm like really shocked because it's super long” (Participant O)

- “It’s well-written.” (Participant TT)

This category was important for us because it demonstrated how students may uncritically look at the text produced by ChatGPT. While many comments made by students about ChatGPT texts indicated deep, critical thinking about the text, many instructors have concerns about students uncritically engaging with ChatGPT in their classes. If the writing bar is only set for “well written” or “good grammar and sentence structure,” it may be easier for students to justify using the text unaltered since it meets the surface-level requirements of the assignment.

"Information Literacy" as Filter

The third most frequently coded filter was “information literacy.” Comments coded as “information literacy” may have included student comments about a lack of citations, the presence or absence of correct or accurate information, and notes about their need to present a different prompt to the technology. In short, this filter addressed critical perspectives of information, and it was important for demonstrating how students leverage information literacy skills to evaluate a text and evaluate their own request for information. Example comments in this category included:

- “It also seems pretty accurate from what I've learned in my biochem class…the one difference would be I don't really see references again.” (Participant E)

- “This is for a research paper. And so I don’t think I could honestly use any of this because I would have to cite my sources unless it’s like common knowledge, I suppose.” (Participant G)

- “...it just comes back to like me being worried about plagiarism, because I don't know how you would cite something like this, or where the information is coming from.” (Participant O)

We also discovered that comments in this category may address questions about the credibility of ChatGPT texts and trustworthiness of sources used in ChatGPT texts as a form of information literacy. This was expressed in multiple ways using words such as “proof,” “evidence,” “misleading,” “trust,” “correct,” and “citations,” as the examples below demonstrate.

- “I think I would need more proof and evidence to support this, because I don't, this could have some misleading information that might not be checked or peer reviewed so.” (Participant AA)

- “But I also wouldn't use it because I wouldn't trust the sources without looking them up first because I question if they exist, or if they're, you know, if the sources are correct. And also it doesn't look like it uses any in-text citations which I would probably want in an essay.” (Participant FF)

"Self and Experience" as Filter

The fourth most common filter was “self and experience.” Students used the "self and experience" filter when framing their reasoning around how ChatGPT impacts their own experience while using the tool. This reasoning came up when students were assessing the writing produced by ChatGPT and when thinking about the implications of turning in an academic assignment produced by AI. Many students said they like doing their own assignments, acknowledging that ChatGPT's output is not their own work. Students using this filter made clear that they were either against or hesitant about using AI-generated texts because doing so would affect their own learning, would not be their own writing, or would have other personal consequences.

Multiple students used this filter for the scenario where they asked ChatGPT to write a literacy narrative. In response to the question “How likely would you be to use this ChatGPT text unaltered as your academic homework?”, one student said, “I like to write my own essays from personal experience so I personally wouldn’t use computer generated text.” Another student said, “there’s a prompt for your homework so you put it in here and it writes something, but it’s not something you wrote, so I would not use that as my homework for a literacy narrative about myself.” These students judged their likelihood of using ChatGPT-produced text on the grounds that the writing did not reflect their personal experience.

Students also used the "self and experience" filter in connection with the simplicity of either the text produced or the assignment in general. As one student who responded to the personal literacy narrative prompt stated: “Because it’s not very well written, I would not want to submit this and say this is the best work that I can produce.” Another student said, “Because that’s something that I could write very easily on my own I don’t really see why anyone would need to use ChatGPT for that.” Students used this reasoning to assert that ChatGPT texts did not reflect their own standards of work. Some thought they could produce something better on their own for an academic assignment, or that it might even be easier to do the work themselves than to ask AI.

The "self and experience" filter shows us that students care about ownership in their work and they see ChatGPT as infringing on this in some scenarios. Students are asserting agency and personal responsibility with their work, as well as their experience of learning, which tells us they see the value in their education.

"Logic and Organization" as Filter

The "logic and organization" filter was used to code student comments that focused on the construction and coherence of ChatGPT texts. Using this filter, students commented on the ways ChatGPT texts were constructed, the integration of details, and whether or not the ideas and arguments were fully developed in the texts. As an example, one student remarked that paragraphs were rather surface level: “It doesn’t have any substance behind the words. It’s honestly like every single one of these paragraphs could be an introductory paragraph for a paper” (Participant G). Another student commented that detail was lacking in paragraphs. However, some students complimented the ways ChatGPT organized ideas, noting that “it gives you an idea of how you could go about this chronologically” (Participant YY), while others commented that ChatGPT writing was very basic and did not reflect college-level writing: “I think that structure is very basic” (Participant T). Overall, comments coded in this filter showed the ways students observed the development and order of ideas in ChatGPT texts.

"Ethics" as Filter

The "ethics" as filter code centers students' emphasis on the moral implications of using ChatGPT for academic assignments. In our codebook, the language used to describe this form of analysis states, “A basic form of analysis students used. This theme category captures how students used external policies to evaluate the text, and how students expressed ethical concerns about using ChatGPT text instead of their own. Comments in this filter include worries about plagiarism, privacy, and getting ‘caught’ using the technology.” "Ethics" as filter shows how students reasoned about the academic and moral consequences of submitting an AI-generated text for an assignment. Through their responses, we can further understand how students are thinking through ethical and academic standards with regard to using ChatGPT. As their responses show, in some cases students are confident in their convictions, and in other scenarios they find the “right” decision to be less straightforward.

Students used this reasoning most frequently when asked the post-task question, “How likely would you be to use this ChatGPT text unaltered as your academic homework?” Many student responses connected the ChatGPT texts to academic integrity by stating that the text was not something they [the student] wrote, and therefore they would not turn it in. These types of responses were especially prevalent for the first prompt scenario: “write a literacy narrative.” The literacy narrative was the prompt most personal to the individual student, and student responses reflect this in how they thought about academic integrity. One student said, “...but it’s not something you wrote, so I would not use that as my homework for a literacy narrative about myself.” Another student stated, “...if this is something I turned in, I don’t think it seems very personal.” Students connected the impersonal nature of the texts to the likelihood that their audience would know the texts were not produced by them, which led to entanglements with ethics and academic integrity.

Other students were calling out ethics and academic integrity in more direct ways by stating their concerns about plagiarism. One student said, “I feel like ChatGPT has a voice almost, and it sounds to me like it’s very AI generated. Like a professor receiving this, I feel like would be extremely doubtful that it’s coming from me, and not AI, since it’s been something that professors have to look out for now.”

"Function" as Filter

"Function" as filter was used to code students' responses where they expressed curiosity about the technological capabilities of ChatGPT. This code appeared most often in the debrief interview section when students were asked, “What questions, if any, does ChatGPT raise for you?” Here, students expressed curiosity about ChatGPT’s sources, asking, “Where is the information coming from?”, “What biases does it have?”, “How does it pull from the Internet?”, and “What kind of database is it pulling from?” They also wondered about how ChatGPT worked, asking, “What can’t it do?”, “How does it work?”, “Does it have different levels of writing?”, “Does it generate a different response for everyone?”, and “Is it accessible? Is it free?” Finally, students wondered about its functionality in the future. They asked, “How much more advanced will this be in a couple of years?” and “Will it get overused?” Under "function" as filter, students illustrated critical thinking about ChatGPT use and concerns about its impact on their academic and non-academic futures.

"Circumstance" as Filter

In their evaluations of ChatGPT texts, students leveraged many kinds of knowledge related to circumstance. We used the code "circumstance" as filter when students responded by filtering information through their academic identity, their major, or content from a specific class or professor. As we used the code, the team came to realize that this description matched well with models of rhetorical situation since students were thinking about audience, content, purpose, author, and timing.

Indications of this filter included mentions of class content or professor expectations, understanding of genres they were working with, and considerations of how ChatGPT “understood” the assignment. Students also considered how the situation would influence whether or not they would use ChatGPT at all. For example, one student mentioned “if this is a last resort, you know, I'd throw it in there, and I probably still get like a C minus, maybe, but it's really not that good. But for a discussion post this would be good enough.” Another participant noted that the text met the expectations of their genre but that it could have been more useful pedagogically by adding numerical examples, “It includes most of the things that you'd expect in a psychological as abstract. I feel like, maybe a bit more like numbers or specific statistics would be nice, even just generating those for an example, although it has no data to base it on overall.” Finally, another student considered their knowledge of professor expectations, saying, “Sometimes professors have their own things that they want you to remember, or they have their own examples that they want you to remember, and they might hope that you kind of personalize your paper or personalize your assignment to fit the course and fit specifically what you've been learning.”

The results from this filter give us some insight into how students understand the rhetorical situation of academic writing. Students rely on expectations set by instructors about the quality of their work and the extent to which course content should be explicitly included in their writing products. In addition, the way that courses talk about genre served as a tool that students used to determine if ChatGPT could be used. Commonly, discussion posts seemed like an area where there was more comfort using ChatGPT, as opposed to papers where students felt their unique ideas were more highly valued or looked for. A few students noted that they were more likely to use ChatGPT in required foundational courses or general education coursework: “I have used before in an academic class particularly for like any liberal education that are not related to economics for in terms of a time-saving aspect as well as to get a general understanding.” This suggests that students are less likely to use ChatGPT when they find the information valuable or directly related to their major or area of study. As we see elsewhere, students care about the value of their education, and, interestingly, it seems that when they don’t find value in a class seemingly unrelated to their major they may be more likely to use AI tools to help them save time. Overall, sixty-two student comments were coded in this category.