The Black-Boxed Ideology of AWE

Antonio Hamilton and Finola McMahon

Themes of Black-Boxing

Theme 1: black-boxing occurs through inconsistent features

Table 1: Assessment Features Organized by Automated Writing Evaluation Program

| Feature | Number of AWE programs offering the feature (of 8) |
|---|---|
| Plagiarism Checker | 6 |
| Grammar | 8 |
| Punctuation | 5 |
| Spell Check | 8 |
| Conciseness/Cohesion | 4 |
| Word Choice/Vocab | 8 |
| Word Count | 2 |
| Tone | 2 |
| Formality Level | 3 |
| Fluency | 4 |
| Clarity | 1 |
| Topic Sentence | 3 |
| Check Paraphrase | 2 |
| Sentence Length/Variance | 4 |
| Argument Strength | 1 |
| Thesis | 2 |
| Audience Checker | 3 |
| Domain | 1 |
| Redundant Content | 3 |
| Conventions | 3 |
| Organization | 3 |
| Ideas | 1 |
| Extraneous Information | 1 |
| Passive Voice | 3 |
| Pacing Check | 1 |

Note. Programs surveyed: WriteToLearn, Grammarly, Virtual Writing Tutor, Criterion, ProWritingAid, Outwrite, Paper Rater, and MI Write.

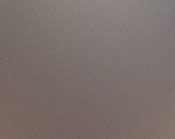

As shown in Table 1, the features offered by the eight programs varied widely. Of the 24 features identified, only three appeared across all eight AWE: "Grammar," "Spell Check," and "Word Choice/Vocab." These more traditional features tended to be left self-explanatory or were defined only briefly. Because the programs leave these terms' definitions to the user's interpretation, even these features remain black-boxed through a lack of specificity. For example, "Grammar" and "Spell Check" may appear universal at first glance. The definition of "correct" grammar can vary based on context, language, and audience, but each program largely implements the category to encourage current-traditional Standard Edited American English (SEAE) grammatical norms, such as those governing syntax and morphology. "Spell Check" is an easier feature to define, with each program generally encouraging SEAE spelling (Conference on College Composition and Communication, 1974). "Word Choice/Vocab" is less clear because the target audience and goals of each AWE vary. For example, WTL and MI Write target K-12 students, whereas VWT targets English as a Second Language (ESL) students. The creator of VWT, Nicholas Walker, provided the most direct insight into how "Word Choice/Vocab" functions as a feedback feature. While designing the software for use in his own ESL classroom, he identified words commonly used and commonly misspelled by his students and programmed the algorithm to pay particular attention to those words, rather than relying on machine learning to identify those patterns independently. Although "Grammar," "Spell Check," and "Word Choice/Vocab" were included in all eight programs, they were not all rhetorically positioned in the same way. Beyond these three, two additional features appeared in the majority of programs: "Plagiarism" (6/8 programs) and "Punctuation" (5/8 programs).

Considered together, the unacknowledged inconsistencies among similar or identical features across programs mean that AWE are black-boxed collectively. The remaining features were each included by four or fewer AWE. Some of these features were not clearly defined, and in cases such as MI Write and Criterion, where the feature is behind a paywall, we were unable to determine the criteria on which the feature was based. Examples include "Extraneous Information" from WTL and "Organization" from MI Write, Criterion, and WTL.

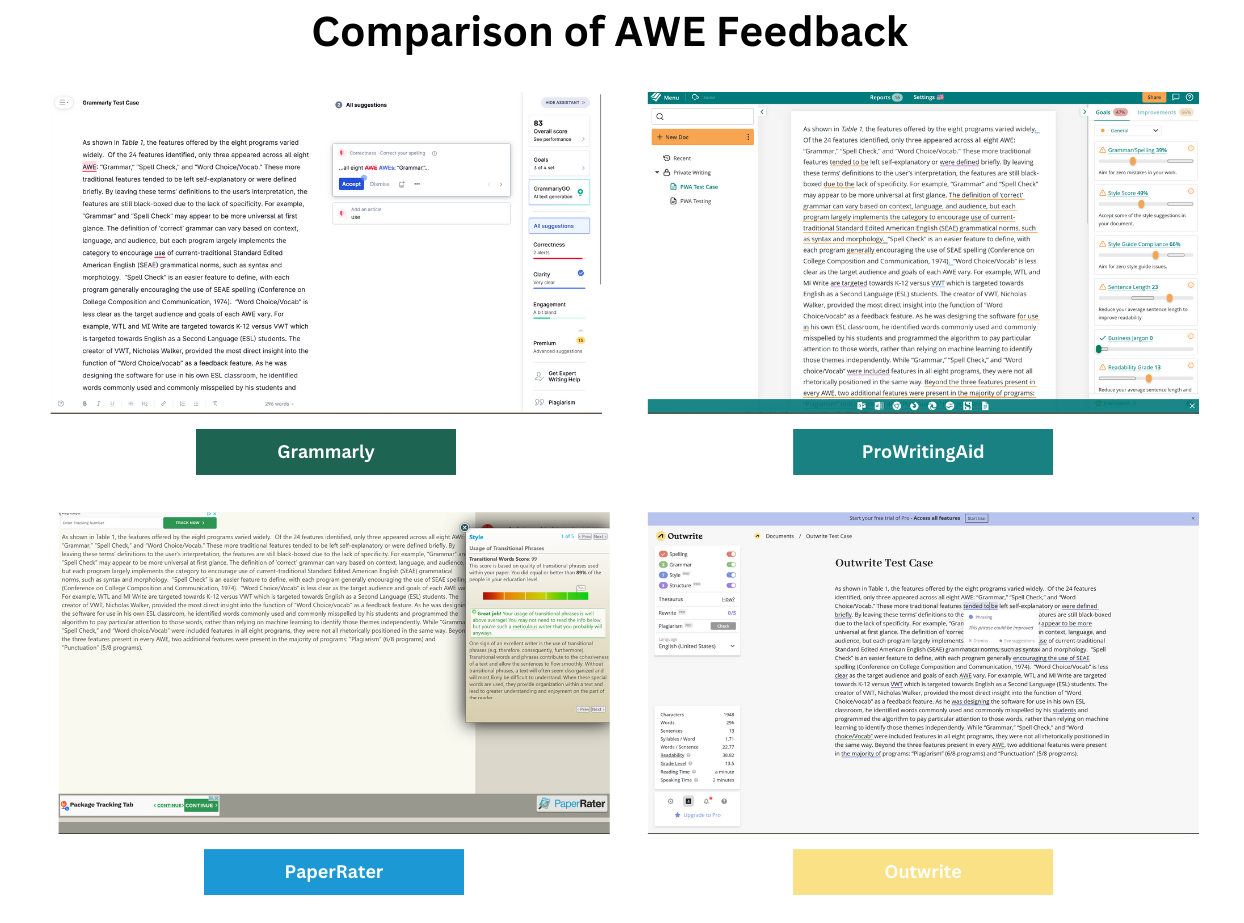

To further highlight the disparity in features across AWE, we note how the "Cohesion/Cohesiveness" feature functions across programs. Although three AWE included this feature, each used it differently. VWT defined "Cohesion" based on transition words, such as "transitions indicating time order," "contrast," "cause and effects," etc. Grammarly appeared to assess "Conciseness" based on efficient phrasing: for its example sentence, "There are some who argue that Macbeth is an antihero, not a villain," Grammarly suggests changing "There are some who argue" to "Some argue." PWA defined the feature through many types of consistency, such as "spelling consistency," "hyphenation consistency," and "capitalization consistency." The "Cohesion/Cohesiveness" category thus serves as a clear example of a static abstraction, used by multiple programs for very different purposes. We explicate the implications of these inconsistencies further in our discussion section.

Theme 2: black-boxing occurs through hyper-jargonizing

Table 2: Definitions of AI Technology Organized by Automated Writing Evaluation Program

| Program | Type of AI Used | AI Technology Definition (as described by the website) |
|---|---|---|
| WriteToLearn | Latent Semantic Analysis | "The KAT engine is based on the mathematical approach known as Latent Semantic Analysis (LSA), which provides a sophisticated computer analysis of text" (Grammarly). |
| | Knowledge Analysis Technologies | "The KAT engine evaluates the meaning of text by examining whole passages. The KAT engine is based on Pearson's unique implementation of Latent Semantic Analysis, an approach that infers semantic similarity of words and passages by analyzing large bodies of relevant text. LSA can then understand the meaning of text in much the same way as a human reader" (Grammarly). |
| Grammarly | Natural Language Processing | No definition is provided; the website offers only multiple articles giving a general overview of its use of NLP. |
| Virtual Writing Tutor | Latent Essay Feature Analysis (LEFA) | "I have developed a special method of quality detection that I call Latent Essay Feature Analysis (LEFA). I use it to discover what makes a great essay great" (Walker). "First we extract and measure a range of latent features from a model essay: the organization, cohesion devices, vocabulary choice, and writing quality. Those measurements are stored in the database as a series of targets" (Walker). |
| | Model Essay Proximity Scoring (MEPS) | "Then, I use Model Essay Proximity Scoring (MEPS) to determine how closely your essay resembles the ideal essay response for each test prompt" (Walker). "When a student submits an essay on the same topic, it measures those same features and generates a proximity score. How close is the submitted essay to the ideal essay? The feedback and band scores tell you how close you are to the ideal" (Walker). |
| Criterion | Natural Language Processing | An area of computer science and AI design "concerned with giving computers the ability to understand text and spoken words" through a use of "computational linguistics […] with statistical, machine learning, and deep learning models" (IBM Cloud Education, 2020). |
| | e-rater Scoring Engine | A specific NLP-based system created by ETS and used across many ETS applications and tests. |
| ProWritingAid | Natural Language Processing | An area of computer science and AI design "concerned with giving computers the ability to understand text and spoken words" through a use of "computational linguistics […] with statistical, machine learning, and deep learning models" (IBM Cloud Education, 2020). |
| Outwrite | Unspecified | N/A |
| Paper Rater | Ginger | "Ginger corrects mistakes, but also gives explanations, language tips and personalised advice to improve your writing and style. It also suggests alternative ways to express your ideas with AI-based Synonyms and a Rephraser that works like a thesaurus for whole sentences" (Ginger Software). |
| MI Write | PEG (Project Essay Grade) | "As with most automated scoring software, PEG utilizes a set of human-scored training essays to build a model with which to assess the writing of unscored essays. Using advanced statistical techniques, PEG analyzes the training essays and calculates more than 500 features that reflect the intrinsic characteristics of writing, such as fluency, diction, grammar, and construction. Once the features have been calculated, PEG uses them to build statistical and linguistic models for the accurate prediction of essay scores. MI enhances scoring accuracy by using extensive custom dictionaries and word lists, producing results that are comparable to MI's well-trained and expert human readers" (Measurement Incorporated). |

Similar to the inconsistencies in the "Cohesion/Cohesiveness" feature, the companies' descriptions of the technology powering their AWE software relied on operationalized terms without clear referents or definitions, otherwise known as jargon. Hirst (2003) notes the negative impact jargon can have, observing that it is often defined pejoratively as "the pretentious, excluding, evasive, or otherwise unethical and offensive use of specialized vocabulary" (p. 202). This jargon was present in the programs' features and interfaces, as well as in their promotional and informational materials, which often included only generalized definitions of the AI technology used (see Table 2). For example, Grammarly noted that its software uses "Natural Language Processing" (NLP) but did not define the type of NLP used. It instead provided only the general term, relying on jargon to black-box its software. Similarly, each program's interface relied on static abstractions to categorize the features it assessed, without explaining what exactly each feature calculated. For example, four of the AWE claim to calculate "Fluency," but none explains what that term means or how it is calculated.

Outwrite stands out from the other programs in that it provides no details on its software's programming. While the other programs describe their technology at least in general terms (e.g., stating that they use "Natural Language Processing" or "Latent Semantic Analysis"), Outwrite makes no attempt to clarify its programming process.

Additionally, Grammarly, PWA, and Criterion appear to attempt to "unbox" their software by providing a wide variety of articles about their features and software. Grammarly's and PWA's articles spoke in very general terms about technological possibilities in AWE and writing feedback, without grounding those possibilities in how their own software works. Both companies housed these articles on their own websites, whereas many academic articles have been published about Criterion and its scoring system, which is used across multiple ETS services. Notably, these AWE all target schools and academic settings. The more public-facing, less academic programs did not provide the same level of attempted transparency.

Theme 3: black-boxing occurs through proprietary and subscription services

Table 3: Access Organized by Automated Writing Evaluation Program

| AWE | Paywall | Access | Target Users |
|---|---|---|---|
| Write To Learn | Paywalled: $14.95 per student per year; $3,500 for full-day training; $1,500 for half-day training; $300 for consultation | No access; must purchase to use | Students from 4th to 12th grade (administrators must purchase) |
| Grammarly | Partial paywall: Free (spelling, grammar, and punctuation checks only); Premium ($12.00/month; all of Grammarly's assessment features); Business ($12.50/member/month; for companies using Grammarly for 3-149 individuals) | Access to the free version, but Grammarly must be downloaded and installed into the software you wish to use it with | Everyone, but also students and businesses |
| Virtual Writing Tutor | No paywall: non-members can have 1,000 words assessed; members (membership is free) can have 3,000 words assessed | Instant access; writing can be pasted or typed directly into a text box | ESL students, primarily |
| Criterion | Paywalled; pricing depends on grade level, the number of students using the program in a class, and the number of essays assessed | Program access upon payment (purchasable only through a school); web-based service used through the ETS Criterion website | Three categories: K-12 students, higher education students, "global customers" |
| ProWritingAid | Partial paywall: Free; Premium ($79/year, $20/month, or $399 lifetime); Premium+ ($89/year, $24/month, or $499 lifetime) | Immediate access (multiple options: their website, a browser extension, a plug-in for word processors, and a desktop app) | Everyone, but also students and businesses |
| MI Write | Paywalled (quote not obtained) | Demo access upon formal request; program access upon payment | Students in grades 3-12 |
| Paper Rater | Partial paywall: Free; readability indices available only to premium members ($7.95/month) | Immediate access | Grades 1-12, undergraduate students, master's students, PhD students, and others |
| Outwrite | Partial paywall: Essential (free); Pro ($9.95/month on a yearly plan, or $24.95/month on a monthly plan); Teams ($7.95/user/month on a yearly plan, or $14.95/user/month on a monthly plan) | Immediate access (multiple options: their website, a Chrome extension, an Edge extension, a Google Docs add-on, a Word add-in, and an API proofreader) | The general public and corporate writers |

Note. This table presents how individual users can access each program's services through its paywall options, along with each AWE's reported target audience.

VWT is the only AWE we studied that is completely free. It uses a two-tiered system of members and non-members, and both tiers are free: non-members can have up to 1,000 words assessed and members up to 3,000. Conversely, MI Write, WTL, and Criterion are all fully paywalled, providing no free option. All three also target academic audiences specifically, and all three must be purchased through a school. Outwrite, Grammarly, Paper Rater, and PWA all provide tiered options allowing free use with limited features or paid use with additional features. PWA is the only one of these services that offers a lifetime purchase option. Every service that provides at least one free option (see Table 3) can be accessed immediately, though some require users to sign in. By contrast, MI Write, WTL, and Criterion provide no way to view or interact with their interfaces on their websites, and only MI Write offers the option to request a demonstration. As a result, users have limited options for testing MI Write and none for WTL and Criterion, which further obfuscates and black-boxes these algorithms. This obfuscation allows fully paywalled programs such as these to control the narrative of how their software is perceived by potential users. Because users cannot form their own narratives from direct experience, free of the creators' influence, the algorithms are further black-boxed. In the process, the creators perform a perceived transparency while limiting independent access to the AWE.

In the tiered payment systems, certain features, such as "Plagiarism" checks, are restricted to paying customers. All four tiered AWE provide "Plagiarism" as a service, but none offers it for free. Outwrite and Grammarly provide "Plagiarism" checks to paying customers, while Paper Rater and PWA use tokens: paying tiers receive a set number of tokens to start, and free users pay for tokens as needed. Many of the features reserved for paid tiers focused on higher-level writing concerns, such as "Tone Suggestions" in Grammarly and "Sentence Rewriting" in Outwrite.