Interfacing Chat GPT

Desiree Dighton

Classroom Heuristic Development Methodology

In the class, we engaged with the GPT-3.5 interface via https://chat.openai.com/. At that time, the ChatGPT interface offered users two GPT options. GPT-4 was available only as a paid tier, so students used the free GPT-3.5 version in classroom activities. I gave students a survey before and after they engaged with ChatGPT, which they did on their own devices in small groups with semi-structured activities over two hour-long class periods. In summarizing these experiences, I've integrated my discussion with anonymized aggregate, paraphrased, and quoted student responses from surveys, activities, and observations. Leading up to these activities, we refrained from discussing ChatGPT, and I set aside two class periods as ChatGPT interface heuristic analysis workshops. In class, I obtained informed consent, which included the option to not participate in any or all parts of the survey and activities.

This class was fully enrolled with 24 students. Students were traditional undergraduates, primarily from small towns in rural and coastal eastern North Carolina. A few students reported being from urban Southeast or Northeast regions, and one student reported being an international student from Mexico. Most students identified as female, with a few identifying as male or non-binary. Most students identified as white, with about one-third of the class identifying as Black, Latinx, or one or more non-white ethnicities.

As part of the ChatGPT survey, I asked students several questions related to their ideas and attitudes toward writing. For example, I asked them to describe their writing and writing processes, to describe characteristics of ‘good writing,’ and to discuss their understanding and impressions of ChatGPT. Just over half of students considered themselves good writers (57.1%) with slightly more students claiming to enjoy writing (61.9%). When asked to use three words to describe their writing to someone else, many students used descriptors related to simplicity, efficiency, safety, logic, organization, and clarity. Similarly, students identified writing strengths using language that maps onto ‘professionalism’. Professionalism, Selfe and Selfe observed, evokes and reproduces white, middle-class corporate values as ideals, and many student responses used “professionalism” to describe their understanding of good writing. Of the more individualized descriptors, students described their writing as “creative,” “enlightening,” “appealing,” “insightful,” “well-spoken,” “feminine,” “dark,” “expansive,” “bright,” “fun,” and “unique.” These descriptors were more likely to appear uniquely in individual responses while the professionalism values were more prevalent throughout student responses to several questions. When asked to identify their greatest strengths as writers from multiple choice options I provided, students chose creativity (13), organization (13), and originality (12). On the whole, the majority believed using correct grammar/spelling (11), incorporating and citing research (11), and doing a final proofread (10) were their greatest weaknesses. When asked about aspects of their writing process, most students said they brainstormed (12), performed preliminary research (15), drafted (13), and sought out feedback from a professor, tutor, or friend/family member (16). 
Perhaps especially revealing, few students reported revising (7) and incorporating and citing research (4) as part of their usual writing process for school or work.

In the class I surveyed, 81% of students stated they were aware of ChatGPT, while 19% stated they’d never heard of it. When asked to associate three words with ChatGPT, responses expressed polarized attitudes: “helpful” appeared as frequently as “cheating,” with terms like “robotic,” “answers,” “easy,” “awesome,” and “evil” swirling together in a complicated morass of feelings and impressions. When asked to explain what ChatGPT does, most students stated some version of “it gives you answers,” “it knows everything,” “it helps you with assignments,” or “it writes your paper for you.” Several students stated openly that they didn’t know what it did (4). When asked to describe how it generated responses, students either indicated they didn’t know or said that it worked “through AI,” with a few students referring to technical aspects like “software,” “metadata and analysis,” “uses an algorithm,” and “has a lot of data it has learned from the internet.” When asked to identify their feelings about ChatGPT, students reported feeling “excited” (10), “intimidated” (9), “distrustful” (9), and “optimistic” (8). Over half of the students surveyed said they had used ChatGPT and been satisfied with the results. When asked what had satisfied them, students provided a range of responses, including praise for ChatGPT’s ability to provide useful outlines, help them learn things like cooking and shaving, and, in sum, answer all the questions they might have about school, work, and life.

This small but significant group of student responses and impressions showed broad satisfaction with ChatGPT's speed and efficiency, and especially with its personalized responsiveness. Watching some students interacting with ChatGPT for the first time, I saw their dazzled expressions as GPT’s streaming response seemed to magically fill the interface without their own fingers hitting any keys. These students reported that they had not used ChatGPT up to this point for a variety of reasons, including concern over the consequences in school or more broadly (6), lack of awareness (2), or fear of disappointing teachers and other authorities (2). At the time, only 23.8% of students (5 respondents) admitted to using ChatGPT to write for school or work. Those students were split on feeling positively or negatively about that experience. More than half of the students, 52.4%, said they’d like professors to teach them how to use ChatGPT, while 28.6% were opposed to ChatGPT instruction from their teachers and about 20% were ambivalent or conflicted about classroom use and instruction. When asked if ChatGPT could write better than most people, 57% of students thought it could not; however, 52% of students thought ChatGPT could write better than they could.

When asked about ethical concerns, 76% of students worried that using ChatGPT would be cheating and/or misrepresenting intellectual property. A few students expressed concerns about GAI being scary and/or dangerous to humanity. A few responses identified potential risks to their individual learning, intellectual, and/or creative growth. When asked whether, if using ChatGPT carried no negative consequences, they would prefer to use it or a similar technology to do most or all of their writing, 61.9% said they wouldn’t use ChatGPT for most writing tasks, while 38.1% answered affirmatively. Students who indicated they wouldn’t use ChatGPT explained that they didn’t want to give up their own writing pleasure, originality, creativity, learning, and/or humanity. Students who said they would use ChatGPT for most writing tasks remarked on GPT’s efficiency, ease of use, and clarity as necessary to overcoming or masking perceived flaws, like laziness or ‘bad’ writing skills, as well as the pressure to use an innovative technology for perceived advantages. Finally, most students responded that they believed they would need to use GAI like ChatGPT within the next five years to be successful in college (76.2%) and in most professional environments that required writing (81%). This survey provided valuable, partial glimpses into student attitudes, beliefs, and values amid this paradigm shift and made students’ vulnerability to this particular technology acutely visible to me. Clearly, some students welcomed ChatGPT as an innovation that could help them without judgement and provide some advantage to help them achieve success and safety in an increasingly challenging landscape. Others expressed concern and anxiety over what ChatGPT may take from their experiences and the changes it may bring to the world they’ll need to navigate.
It’s worth restating that regardless of their personal preferences for using or refusing ChatGPT, most believed they would be compelled to use GAI in academic and professional contexts.

Heuristic Discussion

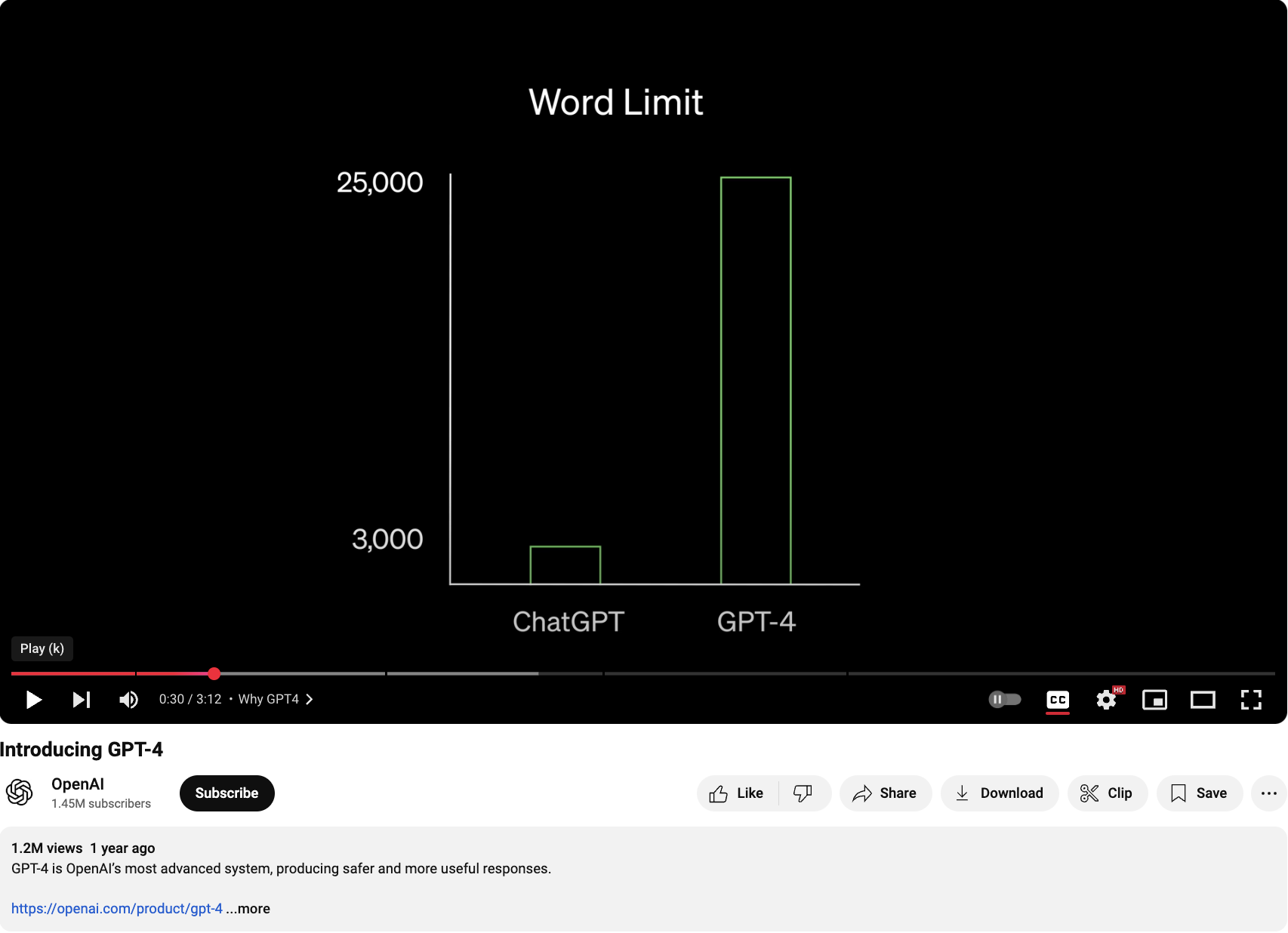

As part of our in-class activities, I asked students to watch OpenAI’s March 15, 2023, promotional video. This 3-minute video is a masterclass of rhetorical maneuvers (Figure 6). Towards the end, a woman says, “We think that GPT-4 will be the world's first experience with a highly capable and advanced AI system. So, we really care about this model being useful to everyone, not just the early adopters or people very close to technology.”

From this moment forward, OpenAI appeals to the general public to become its ideal users—all of us are welcome, regardless of technical skills or subject-matter expertise. This promotional video acts as a secondary interface, bartering access between the public and the product through affective maneuvers. In the video, the OpenAI spokesperson continues, “It is really important to us that as many people as possible participate so that we can learn more about how it can be helpful to everyone.” With this, OpenAI appeals to the public’s shared values that being helpful and contributing is good as a way to gain our acceptance and use of its product, ChatGPT. When students were asked about their impressions of this promotional video, responses were mostly positive. Students felt the video provided more information about ChatGPT’s capabilities, its improvements, and, perhaps most notably, its ability to learn from them while also serving larger innovative and humanitarian purposes.

When asked to provide feedback on ChatGPT’s web-based interface, most students indicated that they liked its simplicity and ease of use: the speed of its responses, the absence of buttons and links, and the conversational interaction. A few students said they especially liked that the interface design feels and looks familiar, like “texting.” Students responded positively to other visual aspects of the design, such as the streaming response and the chat archive options. When asked what they disliked about the interface, nearly half of students said there wasn’t anything about the interface they didn’t like, while a few students said it was too basic, bland, or simple, or its responses took too long to generate. When asked what they would change about GPT’s interface design or functionality to better reflect their preferences and desires, most students stated they would not change anything. Some students responded with various design ideas like changing the re-generate feature and memory for better storytelling, providing options for restricting the date-range of data used in the responses, and adding functions to better determine the trustworthiness of information. One student response stood out with the suggestion for “a design that will appeal to the outside audience.” This last response is one that I’d like to linger on before concluding.

As students participated in these classroom activities, I wondered how each one oriented themselves to the bodies, identities, and values embedded in ChatGPT’s interfaces. This student’s response, a design that would appeal to an outside audience, evoked a more nuanced perspective on ChatGPT’s interface design than that of classmates who easily accepted it as is, seemingly without questioning whether it could or should look or function any differently. Unique responses like this student’s sense that designs could be influenced by non-dominant but important perspectives and needs could fuel dynamic GAI classroom discussions and interactions that expand critical awareness and build critical AI literacies: the competence and perhaps even the agency to resist the norming potential of technologies we access through interfaces. Given these student responses, it was clear to me how vulnerable students were to the pull of GAI’s affective flirtations and pressures, regardless of individual ethical concerns or personal use preferences.

Developing critical AI literacies in our classrooms may counteract some of the potential negative consequences of GAI for classroom learning and writing skill acquisition. If students are taught to deepen their interface analysis skills and consider dominant narratives as powerful rhetoric rather than stable truths, they develop the critical distance necessary to question GPT's results, a responsibility even OpenAI says it wants from users. We can break the mesmerizing spell of GAI's brilliance, in part, by focusing on its materiality and the rhetoric circulating in its ambience. The materiality of its interface is the seat of its power. By focusing our attention on the interface's materiality and circulation, we discern GPT's values, not least of which is its desire to situate us as passive consumers and uncompensated producers in its data economies. GPT’s interface tightly controls user agency by acting as an access point to a "generic" response, offering little user control over, or system transparency into, the processes and sources that determine its responses. These and other values allow GPT to remake our human productions of culture and information into de-contextualized data that it can return in personalized responses. This remaking of the concrete and particular into the abstract and general is how GPT appears to create new written texts. GAI’s interfaces and circulation have been designed with overt, persistent affective flirtations: pleas for friendship, polite and apologetic hedges, personalized responses, and the circulatory magic of GAI’s innovative power to provide personal and social advantages. These affective flirtations disguise the application’s profit-based goals and limit user agency while norming us to practices that feed data economies and profit tech companies.